In this project, we are building a unified hardware accelerator for cortically inspired algorithms. These algorithms are advanced machine learning algorithms that can be used in complex recognition tasks. Algorithms under investigation include the following:

- Long Short-Term Memory (LSTM). This supervised recognition algorithm uses deep recurrent networks.

- Hierarchical Temporal Memory (HTM). This algorithm, developed by Numenta, can work on unlabelled data. It emphasizes learning through understanding temporal and spatial associativity and uses a sparse representation.

- Sparsey. This algorithm, developed by Neurythmics, emphasizes "one-hot" learning, associative memories and sparse representation. It can work on unlabelled data.

- Cogent Confabulation. This algorithm is used to estimate the likelihood of an observation.

We also plan to look at deep convolutional networks and spiking networks.

This work is funded by DARPA and Google.

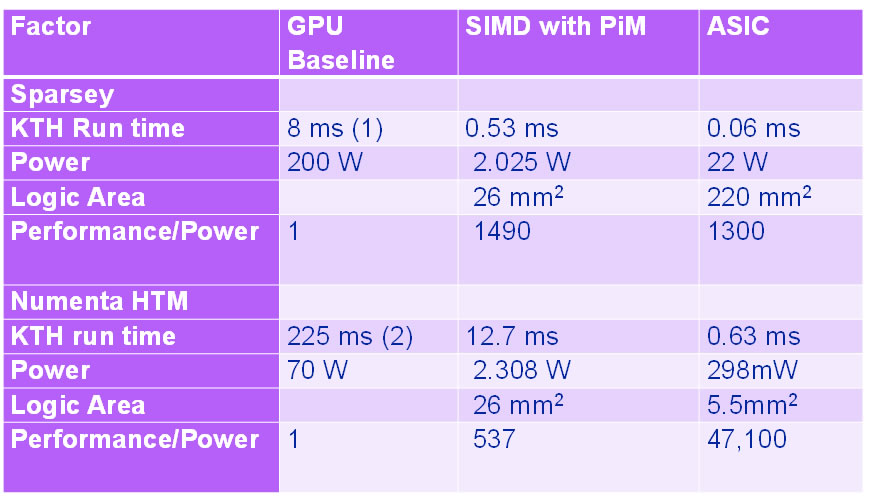

The table below shows some results to date, measured on the KTH video action recognition benchmark. Three versions of Sparsey and HTM were built: one that runs on a GPU, one that runs on a custom SIMD processor, and one that runs on the ASIC. The table summarizes the improvement in performance/power obtained with the hardware accelerators.